Multi-Task Learning with R-CNN-based Models

1. Introduction

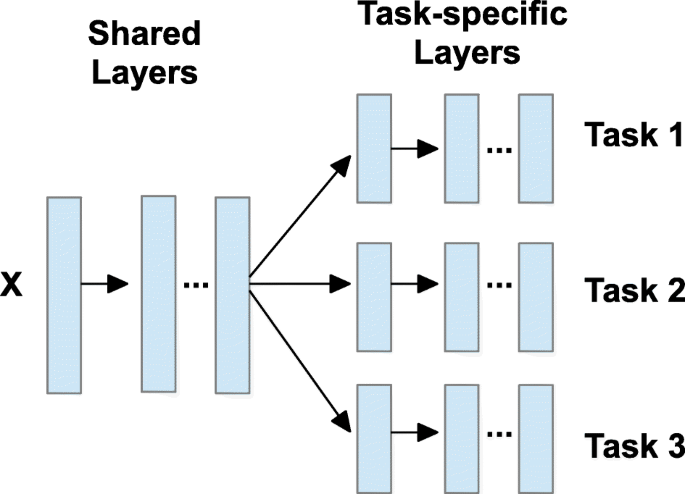

Multi-Task Learning (MTL) is a machine learning technique in which a single model learns several tasks simultaneously, using information shared between them to improve overall performance. In object detection, R-CNN-based models can be extended to perform classification, detection, segmentation, and keypoint estimation within a single network.

Because features are shared across tasks, MTL can reduce overfitting, improve generalization, and save computation compared with running a separate model for each task. This article focuses on how MTL is integrated into R-CNN models to improve efficiency in computer vision applications.

2. Concept of Multi-Task Learning

Multi-Task Learning trains one model to perform several tasks at the same time. Instead of training a separate model for each task, MTL exploits the relationships and shared features between tasks: each task provides an additional training signal for the shared layers, which helps reduce overfitting and improve generalization.

In MTL, tasks usually share most of the network (typically the backbone), while each task has its own branch, or head, that produces an output in the form that task requires.
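The shared-backbone, separate-heads layout can be illustrated with a toy forward pass. This is a minimal sketch in plain Python, not an R-CNN: the class `MultiTaskNet`, the dense layers, and all sizes are hypothetical choices made only to show that the shared features are computed once and then reused by every task head.

```python
import random


def linear(weights, bias, x):
    """Dense layer: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a dense layer (illustrative only)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


class MultiTaskNet:
    """Hypothetical toy model: one shared backbone, two task-specific heads."""

    def __init__(self, n_in=8, n_hidden=16, n_classes=3, seed=0):
        rng = random.Random(seed)
        self.backbone = init_layer(n_in, n_hidden, rng)        # shared by all tasks
        self.cls_head = init_layer(n_hidden, n_classes, rng)   # task 1: class scores
        self.box_head = init_layer(n_hidden, 4, rng)           # task 2: box coordinates

    def forward(self, x):
        # Shared features are computed once and fed to every head.
        features = relu(linear(*self.backbone, x))
        return {
            "class_scores": linear(*self.cls_head, features),
            "box": linear(*self.box_head, features),
        }


net = MultiTaskNet()
outputs = net.forward([0.1] * 8)
print(len(outputs["class_scores"]), len(outputs["box"]))  # 3 4
```

Adding another task in this sketch means adding one more head that reads the same `features`; the backbone itself is left unchanged.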

3. Applications in R-CNN

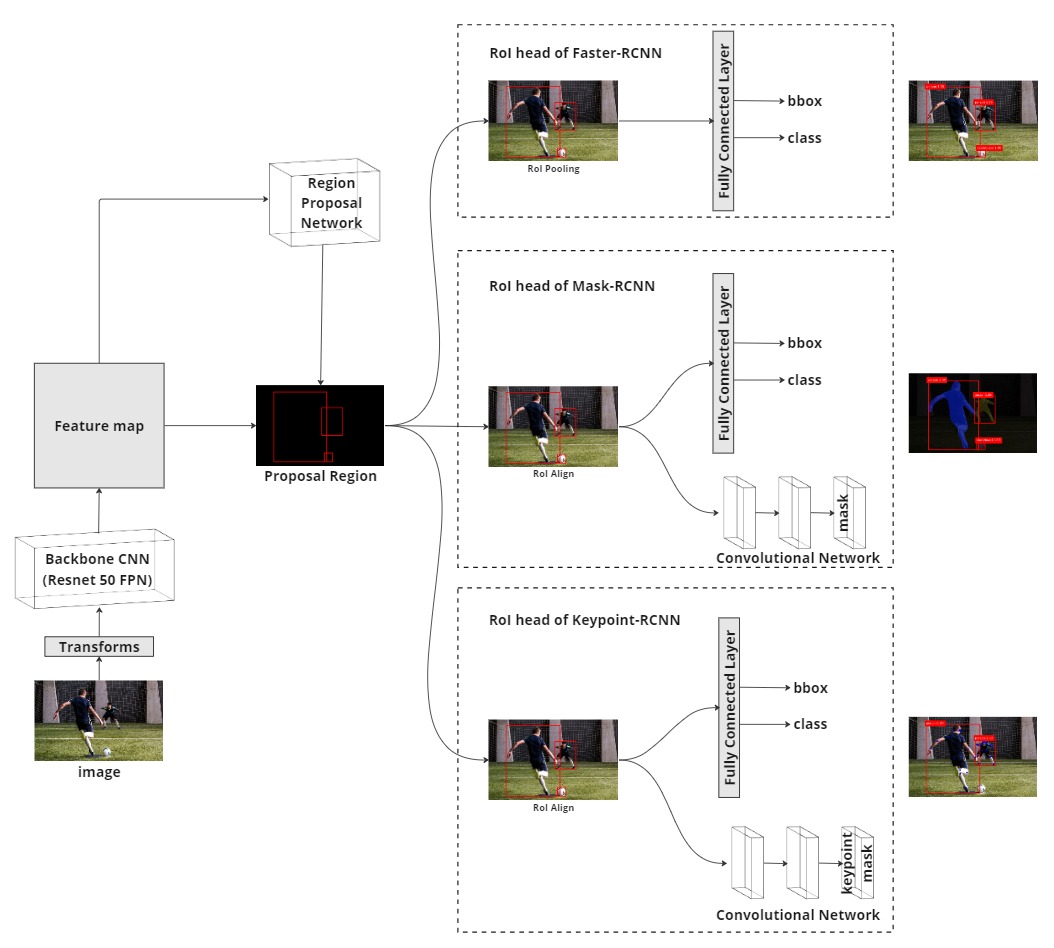

R-CNN models have a two-stage design that is well suited to Multi-Task Learning:

- Faster R-CNN: performs object detection by jointly classifying each region and regressing its bounding box.

- Mask R-CNN: adds a pixel-wise segmentation branch on top of detection.

- Keypoint R-CNN: further extends the model to predict keypoints on objects.

All three variants share one feature backbone and add a separate output branch for each task, so the model learns multiple tasks simultaneously within one framework.
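Training several branches on one backbone usually means combining the per-task losses into a single objective; Mask R-CNN, for example, sums its classification, box, and mask losses. The sketch below shows one simple way to do this as a weighted sum. The function name `total_loss`, the task names, the weights, and the loss values are all hypothetical, chosen for illustration only.

```python
def total_loss(task_losses, task_weights=None):
    """Combine per-task losses into one scalar as a weighted sum.

    task_losses:  dict mapping task name -> loss value
    task_weights: dict mapping task name -> weight (defaults to 1.0 per task)
    """
    if task_weights is None:
        task_weights = {name: 1.0 for name in task_losses}
    missing = set(task_losses) - set(task_weights)
    if missing:
        raise KeyError(f"no weight given for tasks: {sorted(missing)}")
    return sum(task_weights[name] * value
               for name, value in task_losses.items())


# Made-up loss values for a detection + segmentation model.
losses = {"classification": 0.42, "box_regression": 0.31, "mask": 0.58}

equal = total_loss(losses)  # every task weighted 1.0
reweighted = total_loss(
    losses,
    {"classification": 1.0, "box_regression": 1.0, "mask": 0.5},
)
print(round(equal, 2), round(reweighted, 2))
```

Because the shared backbone receives gradients from every term in the sum, the weights control how strongly each task shapes the shared features; equal weights are only a starting assumption.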